![Online Adaptation of Convolutional Neural Networks for Video Object Segmentation [BMVC 17 Oral]](/blog/assets/img/dsfsfdsfdsf.png)

Online Adaptation of Convolutional Neural Networks for Video Object Segmentation [BMVC 17 Oral]

2017, Dec 14

One Line Summary

- Online adaptation uses the temporal consistency between video frames as a cue, which gives better video object segmentation results.

Motivation

- Video object segmentation differs from single-frame segmentation: the foreground object being segmented cannot change position drastically from one frame to the next, and this information can be exploited to get better results.

Detailed Summary

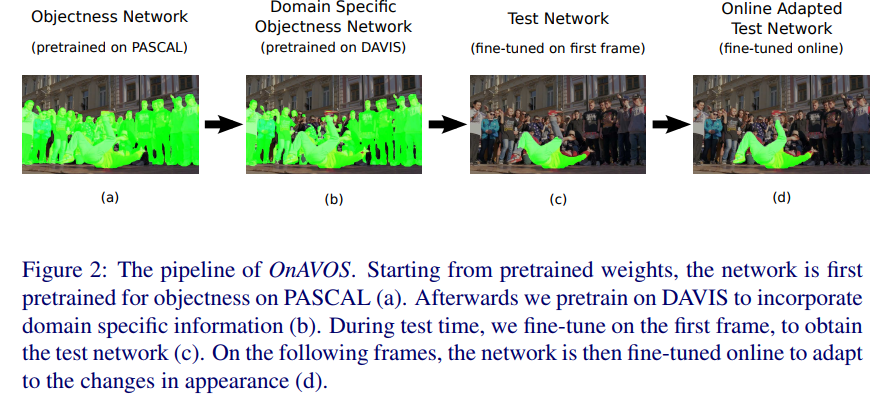

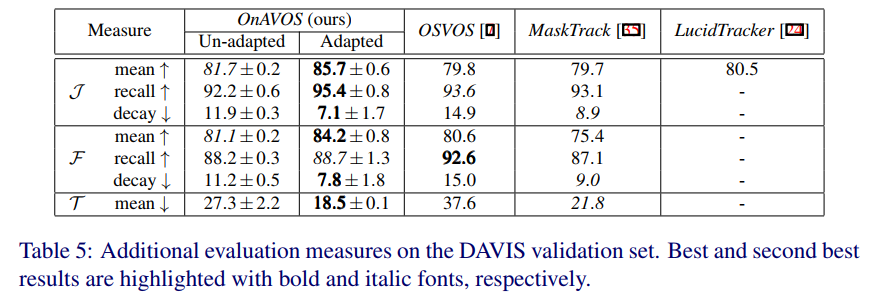

- This work builds on OSVOS, an earlier video object segmentation method, and follows the same semi-supervised approach. It adds an online adaptation step after each frame prediction in the video. At the time of writing, it is the state of the art on the DAVIS dataset for semi-supervised video object segmentation.

Novelty and Contributions

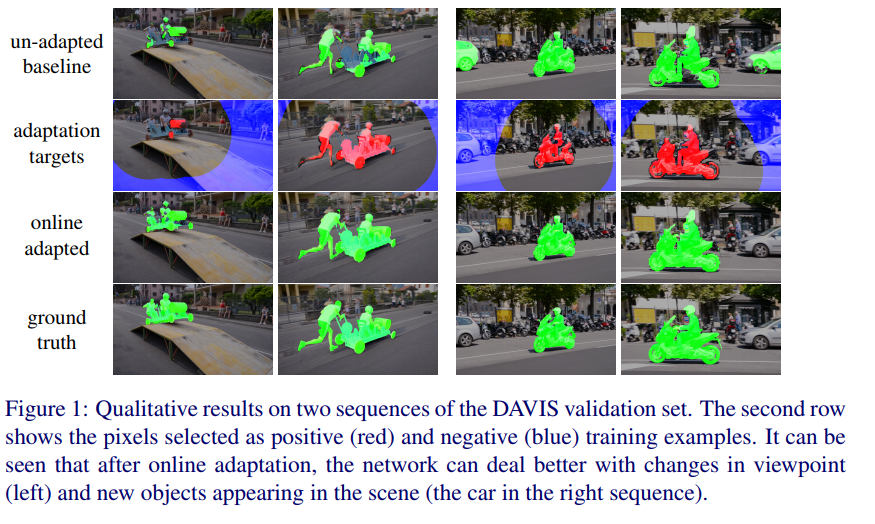

- Uses online adaptation to extract temporal consistency cues.

- Uses negative samples for hard negative mining.

- Mixes the ground truth of the initial frame into the online updates for better results.
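One way to picture how a prediction could be turned into training labels for the online updates is the minimal sketch below. It rests on assumptions that this summary does not spell out: confidently predicted foreground pixels become positives, pixels far from the predicted mask become negatives, and everything in between is ignored. The function name `adaptation_labels`, the thresholds `pos_threshold` and `neg_distance`, and the use of Chebyshev distance are all hypothetical choices for illustration.

```python
POSITIVE, NEGATIVE, IGNORE = 1, 0, -1


def adaptation_labels(prob, pos_threshold=0.9, neg_distance=2):
    """Build per-pixel training labels from a foreground probability map.

    prob is a 2D list of foreground probabilities in [0, 1].
    The thresholds are illustrative assumptions, not values from the paper.
    """
    height, width = len(prob), len(prob[0])
    # Pixels the network currently predicts as foreground.
    mask = [(r, c) for r in range(height) for c in range(width)
            if prob[r][c] > 0.5]
    labels = [[IGNORE] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            if prob[r][c] >= pos_threshold:
                # Confident foreground: use as a positive example.
                labels[r][c] = POSITIVE
            elif mask and min(max(abs(r - mr), abs(c - mc))
                              for mr, mc in mask) > neg_distance:
                # Far from the predicted object: use as a negative example.
                labels[r][c] = NEGATIVE
    # If nothing was predicted as foreground, every pixel stays IGNORE.
    return labels


labels = adaptation_labels([[0.95, 0.6, 0.1, 0.1, 0.1, 0.1]])
print(labels)
```

The ignored band around the object keeps uncertain pixels from being pushed in either direction, while the far-away negatives rely on the motivation above: the object cannot jump far between consecutive frames.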

Network Details

- The network first goes through an initial training step to learn overall objectness information. Then, after each frame, it is trained on the predictions of the previous frame, which is the online adaptation step.
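The overall loop can be sketched roughly as follows. This is a simplified illustration, not the authors' implementation: `ToyModel`, its `predict` and `train_step` methods, and the step counts are hypothetical stand-ins, and the objectness pretraining is assumed to have happened already. The sketch only shows the order of operations: fit to the annotated first frame, predict each new frame, then update on that prediction while mixing in the first-frame ground truth.

```python
class ToyModel:
    """Stand-in for a segmentation network (hypothetical interface)."""

    def __init__(self):
        self.updates = []  # log of where each training target came from

    def predict(self, frame):
        # Toy prediction: threshold pixel values at 0.5.
        return [[1 if value > 0.5 else 0 for value in row] for row in frame]

    def train_step(self, frame, target, source):
        # A real network would take a gradient step here; we only log it.
        self.updates.append(source)


def segment_video(frames, first_mask, model, initial_steps=2, online_steps=1):
    """Segment a video with online adaptation (illustrative sketch)."""
    # Fit to the annotated first frame (semi-supervised setting).
    for _ in range(initial_steps):
        model.train_step(frames[0], first_mask, source="first_frame")

    masks = [first_mask]
    for frame in frames[1:]:
        mask = model.predict(frame)
        masks.append(mask)
        for _ in range(online_steps):
            # Adapt on the network's own prediction for this frame ...
            model.train_step(frame, mask, source="prediction")
            # ... and mix in the first-frame ground truth.
            model.train_step(frames[0], first_mask, source="first_frame")
    return masks


frames = [[[0.9, 0.1]], [[0.8, 0.2]], [[0.3, 0.7]]]
model = ToyModel()
masks = segment_video(frames, [[1, 0]], model)
print(masks)
print(model.updates)
```

Interleaving the first-frame update with each prediction-based update is one simple way to do the mixing; the actual schedule and the label construction from predictions would need to follow the paper.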

Algorithm

Results

Authors

Paul Voigtlaender, Bastian Leibe