![Multi-Task Learning Using Uncertainty to Weigh Losses for Scene Geometry and Semantics[arXiv May 17]](/blog/assets/img/ddsfdsdsafdsa.png)

Multi-Task Learning Using Uncertainty to Weigh Losses for Scene Geometry and Semantics[arXiv May 17]

2017, Dec 05

One Line Summary

- Deep learning applications benefit from multi-task learning with multiple regression and classification objectives. The paper presents a principled approach to multi-task deep learning that weighs the multiple loss functions by task uncertainty.

Motivation

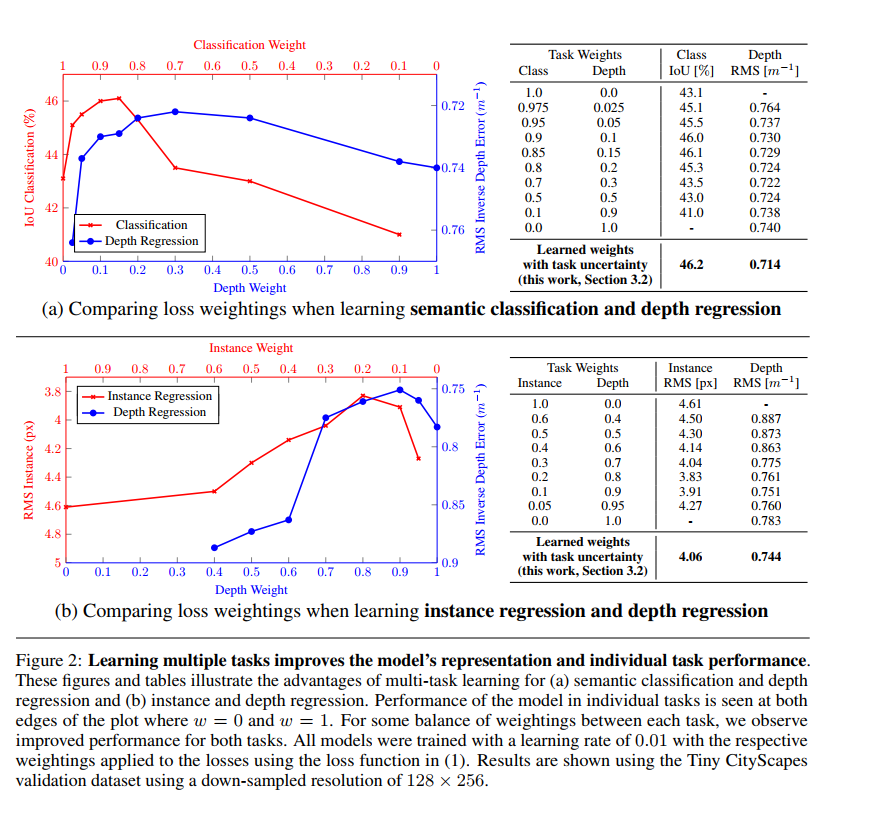

- The performance of a multi-task system depends strongly on the relative weighting between each task’s loss. Tuning these weights by hand is difficult and expensive, which makes multi-task learning prohibitive in practice.

Detailed Summary

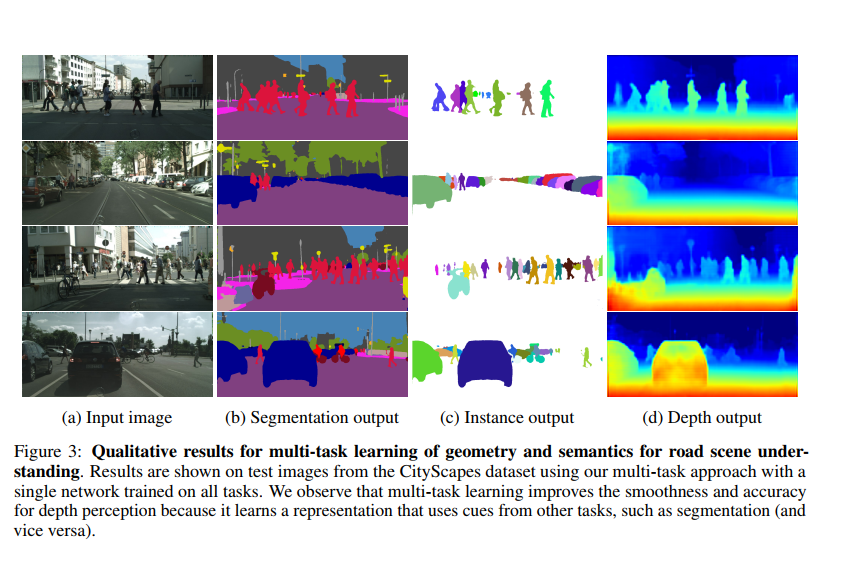

- Multi-task learning aims to improve learning efficiency, generalization and prediction accuracy by learning multiple objectives from a shared representation.

- Prior multi-task learning approaches use a naive weighted sum of losses, but performance is highly sensitive to the weight given to each loss.

- This approach learns the loss weights instead, aiming for optimal predictions and efficiency across the tasks.
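
To make the sensitivity of the naive weighted sum concrete, here is a minimal sketch. The function names and the loss values are made up for illustration and do not come from the paper. It shows how the share of the objective owned by each task shifts with the hand-picked weights when the losses live on different scales.

```python
def weighted_sum_loss(task_losses, weights):
    """Naive multi-task objective: one fixed, hand-picked weight per task."""
    if len(task_losses) != len(weights):
        raise ValueError("need exactly one weight per task loss")
    return sum(w * loss for w, loss in zip(weights, task_losses))


def task_shares(task_losses, weights):
    """Fraction of the total objective contributed by each task."""
    total = weighted_sum_loss(task_losses, weights)
    return [w * loss / total for w, loss in zip(weights, task_losses)]


# Hypothetical per-task losses on different scales (illustrative values only).
losses = [0.5, 4.5]  # [classification loss, regression loss]

equal = task_shares(losses, [1.0, 1.0])     # regression dominates the objective
rescaled = task_shares(losses, [9.0, 1.0])  # hand-tuned so both tasks contribute equally

print(equal)
print(rescaled)
```

With equal weights the larger-scale loss drives almost all of the gradient signal, and fixing that by hand means re-tuning whenever the loss scales change during training.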

Novelty and Contributions

- A novel and principled multi-task loss that uses homoscedastic task uncertainty to simultaneously learn classification and regression objectives of varying quantities and units.

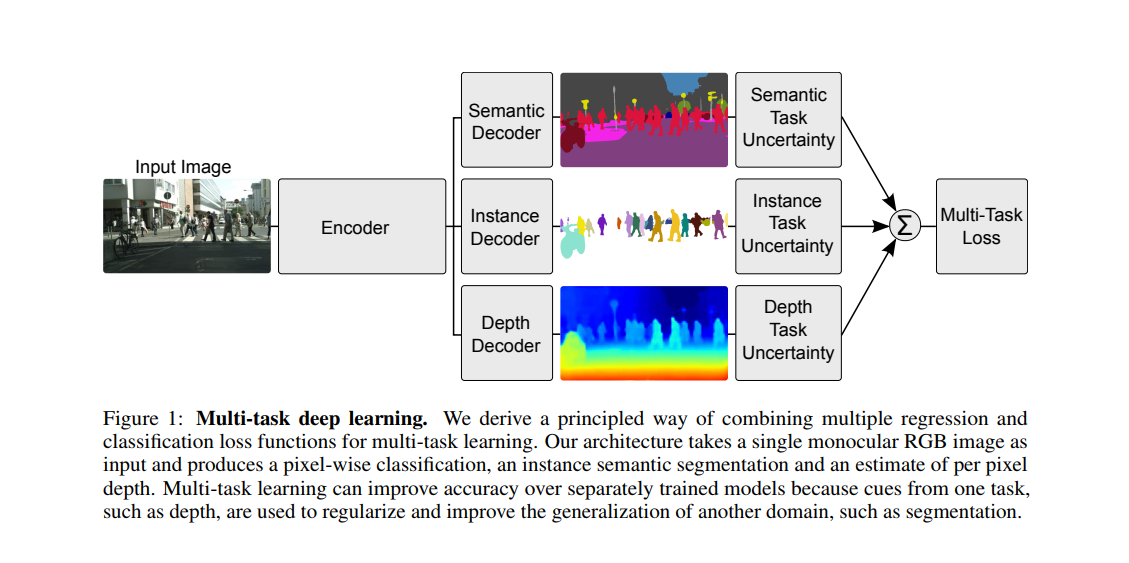

- A unified architecture for semantic segmentation, instance segmentation and depth regression.

- Demonstrating the importance of loss weighting in multi-task deep learning and how to obtain superior performance compared to equivalent separately trained models.
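
The uncertainty-based loss from the first contribution can be sketched roughly as follows. This assumes the form given in the paper: each task loss is scaled by 1/σ² (1/(2σ²) for regression tasks) and a log σ term is added as a regularizer, with the model learning s = log σ² per task for numerical stability. The function name, argument names and example values are hypothetical, and in a real model the `log_vars` would be trainable parameters rather than plain floats.

```python
import math


def uncertainty_weighted_loss(task_losses, log_vars, is_regression):
    """Combine task losses using one learned log-variance s = log(sigma^2) per task.

    Sketch only: in practice each s is a trainable parameter that is
    optimized jointly with the network weights.
    """
    if not (len(task_losses) == len(log_vars) == len(is_regression)):
        raise ValueError("need one log-variance and one task type per loss")
    total = 0.0
    for loss, s, regression in zip(task_losses, log_vars, is_regression):
        precision = math.exp(-s)             # 1 / sigma^2
        scale = 0.5 if regression else 1.0   # regression terms carry an extra 1/2
        total += scale * precision * loss    # uncertainty-scaled task loss
        total += 0.5 * s                     # log(sigma) = 0.5 * log(sigma^2)
    return total


# Hypothetical values: one classification loss and one larger regression loss.
losses = [0.5, 4.5]
kinds = [False, True]  # False = classification, True = regression

baseline = uncertainty_weighted_loss(losses, [0.0, 0.0], kinds)
# Raising s for the high-loss task down-weights it, at the cost of the log term.
adjusted = uncertainty_weighted_loss(losses, [0.0, math.log(4.5)], kinds)

print(baseline)
print(adjusted)
```

The log term is what keeps the weights from collapsing: pushing σ towards infinity would zero out a task's loss, but the penalty grows with it, so each s settles where the two balance.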

Network Details

- The network uses the same encoder for all three tasks.

- The decoder is separate for each task.
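
The shared-encoder layout can be sketched structurally as below. This is a toy stand-in, not the paper's network: the encoder and the three decoders are hypothetical placeholder functions over a short list of numbers, chosen only to show that the encoder runs once per input and every task head reads the same features.

```python
class SharedEncoder:
    """Toy stand-in for the shared encoder; counts how often it runs."""

    def __init__(self):
        self.calls = 0

    def __call__(self, pixels):
        self.calls += 1
        mean = sum(pixels) / len(pixels)
        spread = max(pixels) - min(pixels)
        return [mean, spread]  # the shared representation


# Hypothetical task-specific decoders: each one reads the same features.
def semantic_decoder(features):
    return "bright" if features[0] > 0.5 else "dark"  # placeholder class label


def instance_decoder(features):
    return [features[1], -features[1]]  # placeholder 2-D vector


def depth_decoder(features):
    return 1.0 / (1.0 + features[0])  # placeholder scalar


DECODERS = {
    "semantic": semantic_decoder,
    "instance": instance_decoder,
    "depth": depth_decoder,
}


def forward(encoder, pixels):
    """Run the encoder once, then feed its features to every task decoder."""
    features = encoder(pixels)
    return {task: decode(features) for task, decode in DECODERS.items()}


encoder = SharedEncoder()
outputs = forward(encoder, [0.2, 0.4, 0.9])
print(outputs)
print(encoder.calls)
```

Because the features are computed once, the three tasks cost far less than three separate networks, and gradients from all task losses flow back into the same encoder.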

Results

Authors

Alex Kendall, Yarin Gal, Roberto Cipolla