![Generating Adversarial Examples with Adversarial Networks [ICLR 18 Under Review]](/blog/assets/img/ddfsfsafdafas.png)

Generating Adversarial Examples with Adversarial Networks [ICLR 18 Under Review]

One Line Summary

- Generates targeted adversarial perturbations with a generative model.

Motivation

- Adversarial perturbations have been shown to fool state-of-the-art networks. Most approaches for generating them rely on gradient-based optimization; this approach instead uses a neural network to generate the adversarial perturbations.

Detailed Summary

-

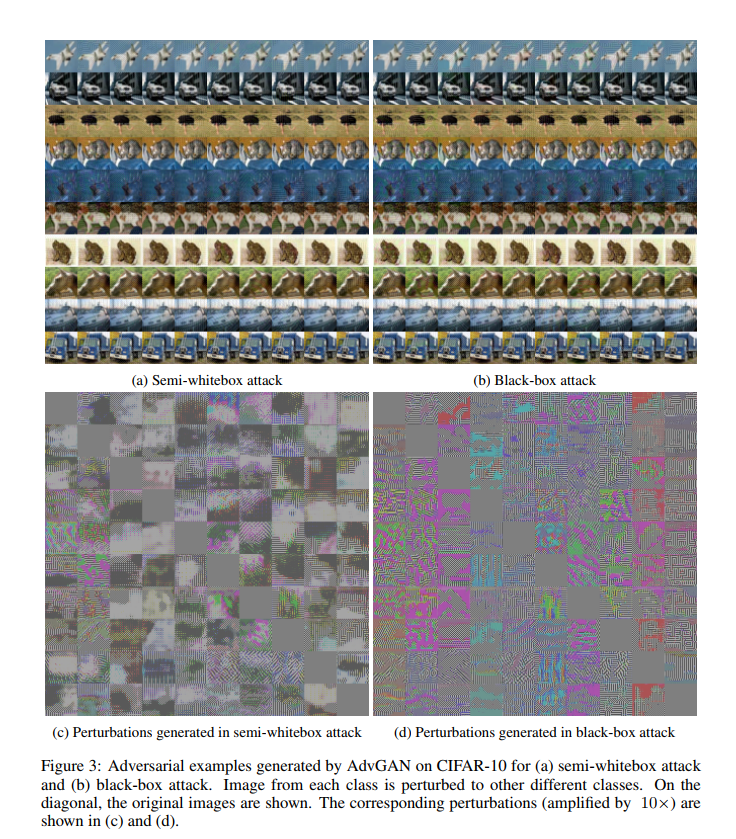

AdvGAN can attack black-box models by training a distilled model. The authors propose to dynamically train the distilled model with query information, which achieves a high black-box attack success rate and enables targeted black-box attacks, something that is difficult to achieve with transferability-based black-box attacks.
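
One plausible reading of "dynamically train the distilled model with query information" is an alternating loop: update the generator against the current distilled model, then query the black box on clean and perturbed inputs and refit the distilled model to those answers. The sketch below shows only that control flow. The function name `dynamic_distillation`, its parameters, and the stub models are hypothetical placeholders, not the authors' code.

```python
def dynamic_distillation(black_box, data, fit_generator, fit_distilled, perturb, rounds=3):
    """Alternate generator updates and distilled-model updates (illustrative only)."""
    query_count = 0
    for _ in range(rounds):
        # Step 1: update the generator while the distilled model is held fixed.
        fit_generator(data)
        # Step 2: query the black box on clean and adversarial inputs ...
        queries = list(data) + [perturb(x) for x in data]
        labels = [black_box(x) for x in queries]
        query_count += len(queries)
        # ... and refit the distilled model to match the black-box outputs.
        fit_distilled(queries, labels)
    return query_count


# Stub components (assumptions) so the loop can run end to end.
calls = {"generator": 0, "distilled": 0}

def fit_generator(batch):
    calls["generator"] += 1

def fit_distilled(queries, labels):
    calls["distilled"] += 1

total_queries = dynamic_distillation(
    black_box=lambda x: int(x > 0.5),      # toy black-box classifier on scalars
    data=[0.2, 0.7],
    fit_generator=fit_generator,
    fit_distilled=fit_distilled,
    perturb=lambda x: min(1.0, x + 0.3),   # toy stand-in for x + G(x)
    rounds=3,
)
```

Because the distilled model is refit on the adversarial inputs as well as the clean ones, it tracks the black box in exactly the region the generator is exploring, which is the intuition behind the dynamic variant.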

-

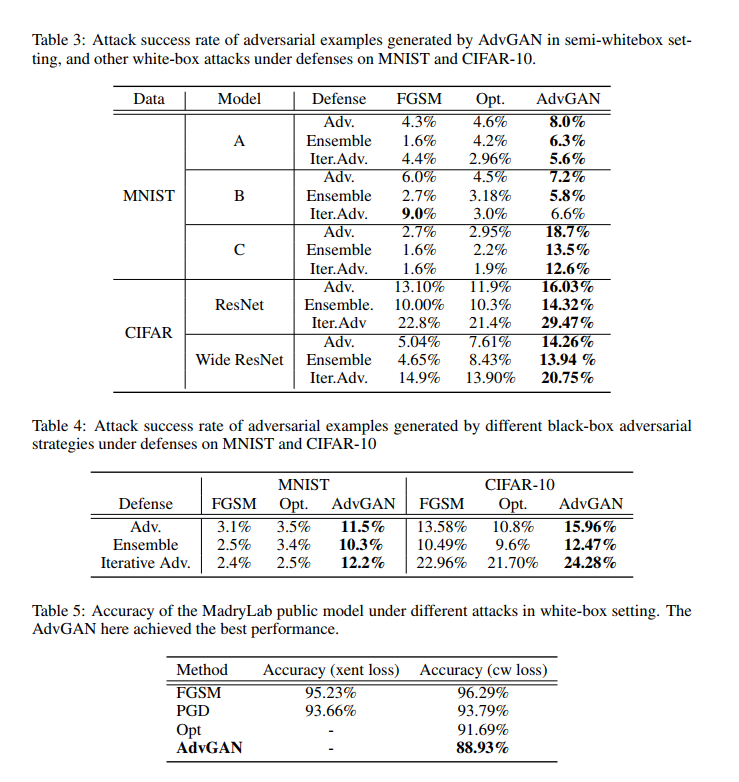

On Mądry et al.'s MNIST challenge (2017a), the published robust model reached only 88.93% accuracy under AdvGAN's attack in the semi-whitebox setting and 92.76% in the black-box setting, which placed AdvGAN first in the challenge.

Novelty and Contributions

-

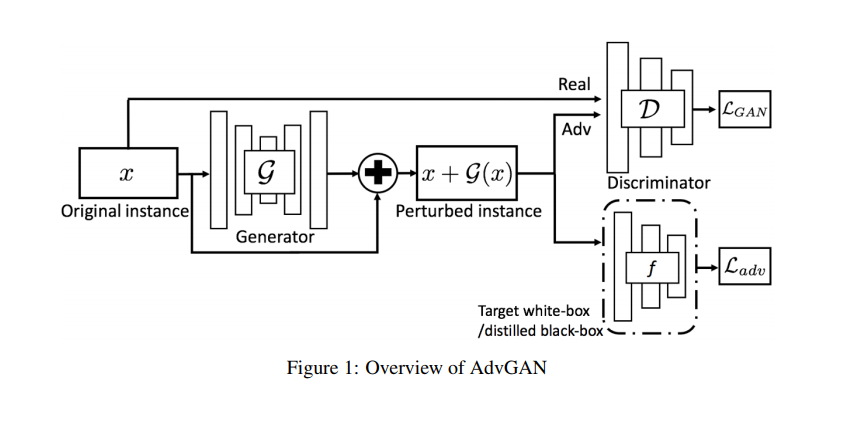

Unlike previous optimization-based methods, AdvGAN trains a conditional adversarial network to directly produce adversarial examples that are both perceptually realistic and achieve a state-of-the-art attack success rate against different target models.

-

The authors apply state-of-the-art defense methods against adversarial examples and show that AdvGAN achieves a much higher attack success rate under current defenses.

Network Details

- The generator takes the image as input and outputs the corresponding perturbation.

- The image plus the perturbation fools the target classifier.
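
The data flow in the two points above can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not the paper's architecture: `generator` is a hypothetical stand-in for the trained network, `target_classifier` is a made-up threshold model, and the bound `eps` and the clipping to `[0, 1]` are illustrative choices.

```python
import math

def generator(image, weights):
    """Hypothetical stand-in for the generator G: image -> perturbation in (-1, 1)."""
    return [math.tanh(w * pixel) for w, pixel in zip(weights, image)]

def adversarial_example(image, weights, eps=0.3):
    """Add the bounded perturbation to the image, then clip to the valid pixel range."""
    perturbation = [eps * p for p in generator(image, weights)]
    return [min(1.0, max(0.0, px + d)) for px, d in zip(image, perturbation)]

def target_classifier(image):
    """Toy target model (an assumption): class 1 if mean brightness exceeds 0.5."""
    return int(sum(image) / len(image) > 0.5)

image = [0.4, 0.45, 0.5, 0.55]     # clean input, mean brightness below 0.5
weights = [5.0, 5.0, 5.0, 5.0]     # pretend these were learned to push toward class 1
x_adv = adversarial_example(image, weights)

clean_label = target_classifier(image)
adv_label = target_classifier(x_adv)
```

Once the generator is trained, producing an adversarial example is a single forward pass, in contrast to running a per-image optimization.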

Results

Authors

Chaowei Xiao, Bo Li, Jun-Yan Zhu, Warren He, Mingyan Liu, Dawn Song